The Joy of Automating Deployments

The team I am on at work has recently been on an automation spree. This stemmed from a disastrous deployment a year ago where things we had tested were broken, things that hadn’t been tested had been deployed, and we ultimately ended up rolling the deployment back. A large part of this experience was down to poor code/git hygiene on the part of some members of the development team. But it did hammer home that we needed to change how we were working.

Prior to the failed deployment, builds were deployed to our staging area by whichever developer was doing work on the code at the time. As a result staging was often in a weird state, with some features missing or incomplete. The weeks leading up to a release would be spent pulling everything together and doing a massive amount of testing and bug fixing. Due to poor git usage by some members of the team, things would take an age and regressions were not uncommon. Even once we had deployed code it wasn’t uncommon to find that an issue we knew had been fixed previously had snuck back onto live.

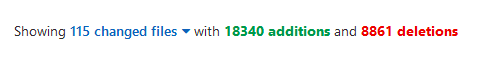

As a result of that failure we reviewed how we worked and made a few changes. Firstly we locked the master branch for all our deployed projects in GitLab. No more pushing your code directly to master - instead you had to submit a merge request and have another team member check that what you were doing was sensible. This alone helped to reduce the “everything and the kitchen sink” commits. Knowing that our commits were going to be reviewed made us keep them reasonably sized (eventually). It also meant that they had to be descriptive. It took some time for some of the team to get into the swing of the new process - and a few merge requests got bounced for having no details provided for ~18k lines of changes (in a single commit) and similar sins.

These days things run far more smoothly though. Everything goes through a review before hitting master. Once the MR gets approved and the code hits master, our CD pipeline will automatically deploy the change to our staging environment. We do not deploy manually to staging. At all.

The biggest and most obvious win for automation so far occurred this morning. We had to do a deployment of the same codebase as a year ago. Our customers were reluctant to allow us to make this deployment in business hours due to the previous disaster. We were able to push back a little though, as we knew that this time things were different.

The first big difference is that we knew that what we were deploying to live was what had been running on staging. The mess from a year ago was gone and we had a solid process. All we needed to do was tag it and then build and deploy from the tag.
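The "what goes live is what ran on staging" guarantee can be checked mechanically before a release. Below is a minimal sketch of such a check, not our actual tooling. It assumes the staging deploy records the commit SHA it deployed somewhere you can read it back; `resolve_commit` and `tag_matches_staging` are hypothetical names made up for illustration.

```python
import subprocess


def resolve_commit(repo_dir, ref):
    """Return the full commit SHA that `ref` (a tag or branch) points to."""
    result = subprocess.run(
        # `<ref>^{commit}` peels an annotated tag down to its commit.
        ["git", "-C", repo_dir, "rev-parse", "--verify", f"{ref}^{{commit}}"],
        check=True,
        capture_output=True,
        text=True,
    )
    return result.stdout.strip()


def tag_matches_staging(repo_dir, tag, staging_sha, resolve=resolve_commit):
    """True if `tag` points at the commit recorded as running on staging.

    `staging_sha` is assumed to be the full SHA written out by the staging
    deploy; how it is recorded is not covered here.
    """
    return resolve(repo_dir, tag) == staging_sha.strip().lower()
```

If the check fails, the tag was cut from something other than what was tested on staging, and the release should stop there.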

The next big difference is that there were no special tweaks needed to the build for live - it was just a case of changing the build profile and firing away. This meant that we could script the deploy very easily. So that is what we did.

Our deployment script takes in the tag we are deploying and does the following:

- Clone the git repos

- Check out the right (tagged) version

- Put an offline notice on our front end servers

- Take a backup of the old code

- Build and deploy the code

- Put the front end servers back online
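For illustration, the steps above could be sketched roughly like this. It is not our real script: the repository URL, host names, paths, the `maintenance` command and the `make deploy` target are all hypothetical stand-ins, and the only thing taken from the list is the order of the steps.

```python
import subprocess

# Hypothetical values: none of these come from the real setup.
REPO_URL = "git@gitlab.example.com:team/app.git"
WORK_DIR = "/tmp/deploy-src"
FRONTENDS = ["web1.example.com", "web2.example.com"]


def deployment_plan(tag, profile="live"):
    """Return the ordered list of commands for deploying `tag`."""
    plan = [
        # Clone the repo, then check out the tagged version (detached HEAD).
        ["git", "clone", REPO_URL, WORK_DIR],
        ["git", "-C", WORK_DIR, "checkout", f"refs/tags/{tag}"],
    ]
    # Put an offline notice on every front end server.
    for host in FRONTENDS:
        plan.append(["ssh", host, "/opt/app/bin/maintenance", "on"])
    # Take a backup of the old code before touching it.
    for host in FRONTENDS:
        backup = f"/var/backups/app-before-{tag}.tar.gz"
        plan.append(["ssh", host, "tar", "-czf", backup, "-C", "/opt/app", "current"])
    # Build and deploy; the build profile is the only live-specific setting.
    plan.append(["make", "-C", WORK_DIR, "deploy", f"PROFILE={profile}"])
    # Put the front end servers back online.
    for host in FRONTENDS:
        plan.append(["ssh", host, "/opt/app/bin/maintenance", "off"])
    return plan


def run(plan, dry_run=True):
    """Print each command; execute it too unless this is a dry run."""
    for cmd in plan:
        print(" ".join(cmd))
        if not dry_run:
            # check=True aborts on the first failing step.
            subprocess.run(cmd, check=True)
```

Calling `run(deployment_plan("v1.2.0"))` only prints the commands, and passing `dry_run=False` executes them. Because the run aborts on the first failure, a broken build leaves the offline notice up rather than bringing a half-deployed site back online.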

And it worked perfectly. Our deployment time went from 3 hours and a rollback to under 10 minutes. We were happy it went well, our customers were happy it went well. And more importantly, we are likely to be able to perform more regular releases now that we have the process settled.

So what did we learn from all this? There are some things that we should keep humans as far away from as possible. Software deployments are one of them. You should be able to pass a robot your code and let it do the deploy without worrying. If you have to worry, your codebase has problems and you need to fix it. We also learned that if you let people slip into the habit of hoarding changes because nobody else is working on a component, things can go downhill very quickly. And then you need to climb back up that hill - which, depending on the personalities involved, can be incredibly hard. Forcing things through code review is one part of climbing that hill, but the reviewers need to be able to reject a request if it isn’t reasonable. If the reviewers feel compelled to accept a merge for whatever reason, they are not fulfilling their side of the process. The review needs to be impartial - which in a small team can be hard at first. Once everybody is used to what is going on though, the general quality of code improves and reviews start to feel like a more normal part of the process.